屏幕后处理效果(screen post-processing effects)是游戏中实现屏幕特效的常见方法。在本章中,我们将学习如何在 Unity 中利用渲染纹理来实现各种常见的屏幕后处理效果。

1 建立一个基本的屏幕后处理脚本系统 屏幕后处理,顾名思义,通常指的是在渲染完整个场景得到屏幕图像后,再对这个图像进行一系列操作,实现各种屏幕特效。使用这种技术,可以为游戏画面添加更多的艺术效果,例如景深、模糊等。

因此想要实现屏幕后处理的基础在于得到渲染后的屏幕图像,即抓取屏幕,Unity 为我们提供了一个方便的接口——OnRenderImage 函数,它的函数声明如下:

MonoBehaviour.OnRenderimage (RenderTexture src, RenderTexture dest)

当我们在脚本中声明此函数后,Unity 会把当前渲染得到的图像存储在第一个参数对应的源渲染纹理中,通过函数中的一系列操作后,再把目标渲染纹理,即第二个参数对应的渲染纹理显示到屏幕上。在 OnReoderlmage 函数中,通常是利用 Grapbics.Blit 函数来完成对渲染纹理的处理。它有 3 种函数声明:

1 2 3 public static void Blit (Texture src, RenderTexture dest) public static void Blit (Texture src, RenderTexture dest, Material mat, int pass = -1 ) public static void Blit (Texture src, Material mat, int pass = -1 )

其中,参数 src 对应了源纹理,在屏幕后处理技术中,这个参数通常就是当前屏幕的渲染纹理或是上一步处理后得到的渲染纹理。参数 dest 是目标渲染纹理,如果它的值为 null 就会直接将结果显示在屏幕上。参数 mat 是我们使用的材质,这个材质使用的 Unity Shader 将会进行各种屏幕后处理操作,而 src 纹理将会被传递给 Shader 中名为_MainTex 的纹理属性。参数 pass 的默认值为 -1,表示将会依次调用 Shader 内的所有 Pass 。否则,只会调用给定索引的 Pass 。

在默认情况下,OnRenderlmage 函数会在所有的不透明和透明的 Pass 执行完毕后被调用,以便对场景中所有游戏对象都产生影响。但有时,我们希望在不透明的 Pass(即渲染队列小于等于 2500 的 Pass,内置的 Background、 Geometry 和 AlphaTest 渲染队列均在此范围内)执行完毕后立即调用 OnRenderlmage 函数,从而不对透明物体产生任何影响。此时,我们可以在 OnRenderlmage 函数前添加 ImageEffectOpaque 属性来实现这样的目的,之后我们会遇到这种情况。

因此,要在 Unity 中实现屏幕后处理效果,过程通常如下:我们首先需要在摄像中添加一个用于屏幕后处理的脚本。在这个脚本中,我们会实现 OnRenderlmage 函数来获取当前屏幕的渲染纹理。然后,再调用 Graphics.Blit 函数使用特定的 Unity Shader 来对当前图像进行处理,再把返回的渲染纹理显示到屏幕上。对于一些复杂的屏幕特效,我们可能需要多次调用 Graphics.Blit 函数来对上一步的输出结果进行下一步处理。

但是,在进行屏幕后处理之前,我们需要检查一系列条件是否满足,例如当前平台是否支持渲染纹理和屏幕特效,是否支持当前使用的 Unity Shader 等。为此,我们创建了一个用于屏幕后处理效果的基类,在实现各种屏幕特效时,我们只需要继承自该基类,再实现派生类中不同的操作即可。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 using UnityEngine;using System.Collections;[ExecuteInEditMode ] [RequireComponent (typeof(Camera)) ] public class PostEffectsBase : MonoBehaviour { protected void CheckResources () bool isSupported = CheckSupport(); if (isSupported == false ) { NotSupported(); } } protected bool CheckSupport () if (SystemInfo.supportsImageEffects == false || SystemInfo.supportsRenderTextures == false ) { Debug.LogWarning("This platform does not support image effects or render textures." ); return false ; } return true ; } protected void NotSupported () enabled = false ; } protected void Start () CheckResources(); } protected Material CheckShaderAndCreateMaterial (Shader shader, Material material ) if (shader == null ) { return null ; } if (shader.isSupported && material && material.shader == shader) return material; if (!shader.isSupported) { return null ; } else { material = new Material(shader); material.hideFlags = HideFlags.DontSave; if (material) return material; else return null ; } } }

后面我们就可以通过继承这个基类来实现一些屏幕后处理效果。

2 调整屏幕的亮度、饱和度和对比度 首先来编写 C# 脚本,继承上面的基类:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 using UnityEngine;using System.Collections;public class BrightnessSaturationAndContrast : PostEffectsBase { public Shader briSatConShader; private Material briSatConMaterial; public Material material { get { briSatConMaterial = CheckShaderAndCreateMaterial(briSatConShader, briSatConMaterial); return briSatConMaterial; } } [Range(0.0f, 3.0f) ] public float brightness = 1.0f ; [Range(0.0f, 3.0f) ] public float saturation = 1.0f ; [Range(0.0f, 3.0f) ] public float contrast = 1.0f ; void OnRenderImage (RenderTexture src, RenderTexture dest ) if (material != null ) { material.SetFloat("_Brightness" , brightness); material.SetFloat("_Saturation" , saturation); material.SetFloat("_Contrast" , contrast); Graphics.Blit(src, dest, material); } else { Graphics.Blit(src, dest); } } }

然后来编写 Shader:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 Shader "Unity Shaders Book/Chapter 12 /Brightness Saturation And Contrast" { Properties { _MainTex ("Base (RGB)", 2 D) = "white" {} _Brightness ("Brightness", Float) = 1 _Saturation("Saturation", Float) = 1 _Contrast("Contrast", Float) = 1 } SubShader { Pass { ZTest Always Cull Off ZWrite Off CGPROGRAM #pragma vertex vert #pragma fragment frag #include "UnityCG.cginc" sampler2D _MainTex; half _Brightness; half _Saturation; half _Contrast; struct v2f { float4 pos : SV_POSITION; half2 uv: TEXCOORD0; }; v2f vert(appdata_img v) { v2f o; o.pos = UnityObjectToClipPos(v.vertex); o.uv = v.texcoord; return o; } fixed4 frag(v2f i) : SV_Target { fixed4 renderTex = tex2D(_MainTex, i.uv); fixed3 finalColor = renderTex.rgb * _Brightness; fixed luminance = 0.2125 * renderTex.r + 0.7154 * renderTex.g + 0.0721 * renderTex.b; fixed3 luminanceColor = fixed3(luminance, luminance, luminance); finalColor = lerp(luminanceColor, finalColor, _Saturation); fixed3 avgColor = fixed3(0.5 , 0.5 , 0.5 ); finalColor = lerp(avgColor, finalColor, _Contrast); return fixed4(finalColor, renderTex.a); } ENDCG } } Fallback Off }

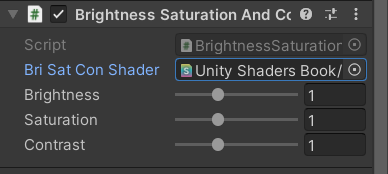

现在返回 Unity 中,将 cs 脚本赋给摄像机,然后在摄像机属性面板中的脚本组件中将上面的 Shader 赋给 Bri Sat Con Shader 属性:

然后调整各个参数就可以调整屏幕效果,原图如下:

调整部分参数后:

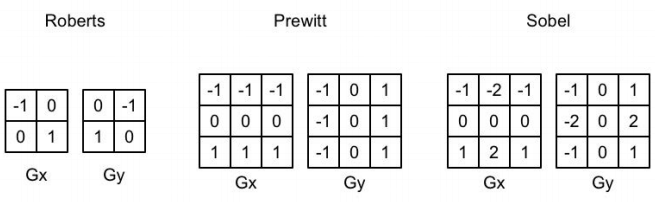

3 边缘检测 边缘检测是一个常见的屏幕后处理效果,用于实现描边效果。常用的边缘检测算子有:

在进行边缘检测时,我们需要对每个像素分别进行一次卷积计算,得到两个方向上的梯度值 $G_x$ 和 $G_y$,而整体的梯度可按下面的公式计算而得:

下面我们使用 Sobel 算子进行边缘检测,cs 脚本和上面类似:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 using UnityEngine;using System.Collections;public class EdgeDetection : PostEffectsBase { public Shader edgeDetectShader; private Material edgeDetectMaterial = null ; public Material material { get { edgeDetectMaterial = CheckShaderAndCreateMaterial(edgeDetectShader, edgeDetectMaterial); return edgeDetectMaterial; } } [Range(0.0f, 1.0f) ] public float edgesOnly = 0.0f ; public Color edgeColor = Color.black; public Color backgroundColor = Color.white; void OnRenderImage (RenderTexture src, RenderTexture dest ) if (material != null ) { material.SetFloat("_EdgeOnly" , edgesOnly); material.SetColor("_EdgeColor" , edgeColor); material.SetColor("_BackgroundColor" , backgroundColor); Graphics.Blit(src, dest, material); } else { Graphics.Blit(src, dest); } } }

然后编写 Shader:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 Shader "Unity Shaders Book/Chapter 12 /Edge Detection" { Properties { _MainTex ("Base (RGB)", 2 D) = "white" {} _EdgeOnly ("Edge Only", Float) = 1.0 _EdgeColor ("Edge Color", Color) = (0 , 0 , 0 , 1 ) _BackgroundColor ("Background Color", Color) = (1 , 1 , 1 , 1 ) } SubShader { Pass { ZTest Always Cull Off ZWrite Off CGPROGRAM #include "UnityCG.cginc" #pragma vertex vert #pragma fragment fragSobel sampler2D _MainTex; uniform half4 _MainTex_TexelSize; fixed _EdgeOnly; fixed4 _EdgeColor; fixed4 _BackgroundColor; struct v2f { float4 pos : SV_POSITION; half2 uv[9 ] : TEXCOORD0; }; v2f vert(appdata_img v) { v2f o; o.pos = UnityObjectToClipPos(v.vertex); half2 uv = v.texcoord; o.uv[0 ] = uv + _MainTex_TexelSize.xy * half2(-1 , -1 ); o.uv[1 ] = uv + _MainTex_TexelSize.xy * half2(0 , -1 ); o.uv[2 ] = uv + _MainTex_TexelSize.xy * half2(1 , -1 ); o.uv[3 ] = uv + _MainTex_TexelSize.xy * half2(-1 , 0 ); o.uv[4 ] = uv + _MainTex_TexelSize.xy * half2(0 , 0 ); o.uv[5 ] = uv + _MainTex_TexelSize.xy * half2(1 , 0 ); o.uv[6 ] = uv + _MainTex_TexelSize.xy * half2(-1 , 1 ); o.uv[7 ] = uv + _MainTex_TexelSize.xy * half2(0 , 1 ); o.uv[8 ] = uv + _MainTex_TexelSize.xy * half2(1 , 1 ); return o; } fixed luminance(fixed4 color) { return 0.2125 * color.r + 0.7154 * color.g + 0.0721 * color.b; } half Sobel(v2f i) { const half Gx[9 ] = {-1 , 0 , 1 , -2 , 0 , 2 , -1 , 0 , 1 }; const half Gy[9 ] = {-1 , -2 , -1 , 0 , 0 , 0 , 1 , 2 , 1 }; half texColor; half edgeX = 0 ; half edgeY = 0 ; for (int it = 0 ; it < 9 ; it++) { texColor = luminance(tex2D(_MainTex, i.uv[it])); edgeX += texColor * Gx[it]; edgeY += texColor * Gy[it]; } half edge = 1 - abs (edgeX) - abs (edgeY); return edge; } fixed4 fragSobel(v2f i) : SV_Target { half edge = Sobel(i); fixed4 withEdgeColor = lerp(_EdgeColor, tex2D(_MainTex, i.uv[4 ]), edge); fixed4 onlyEdgeColor = lerp(_EdgeColor, _BackgroundColor, edge); return lerp(withEdgeColor, onlyEdgeColor, _EdgeOnly); } ENDCG } } FallBack Off }

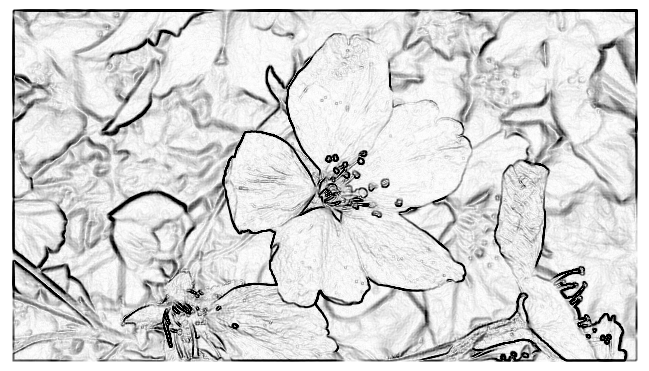

_EdgeOnly 为 0 时的效果如下:

_EdgeOnly 为 1 时的效果如下:

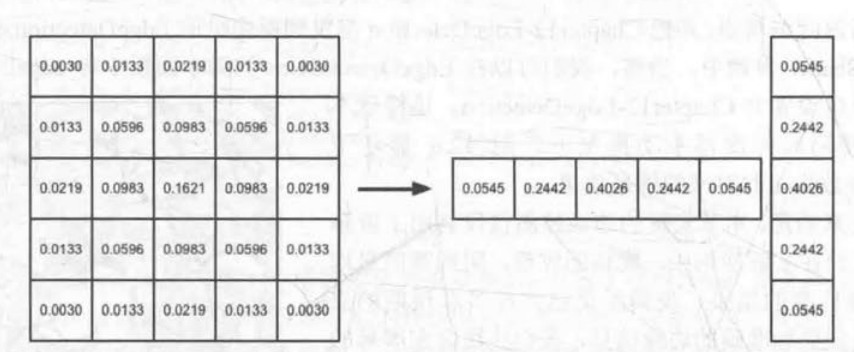

4 高斯模糊 高斯模糊我们非常熟悉,但还是有一个小技巧需要说明,那就是存储高斯模糊核时不需要全部存储,只需要存储极少的权重即可,因为:

所以一个 5 * 5 的高斯模糊核我们只需要存储 3 个权重即可。

并且为了提高性能,我们使用两个 Pass,第一个 Pass 将会使用竖直方向的一维高斯核对图像进行滤波,第二个 Pass 再使用水平方向的一维高斯核对图像进行滤波,得到最终的目标图像。同时还将利用图像缩放来进一步提高性能,并通过调整高斯滤波的应用次数来控制模糊程度。

cs 脚本如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 using UnityEngine;using System.Collections;public class GaussianBlur : PostEffectsBase { public Shader gaussianBlurShader; private Material gaussianBlurMaterial = null ; public Material material { get { gaussianBlurMaterial = CheckShaderAndCreateMaterial(gaussianBlurShader, gaussianBlurMaterial); return gaussianBlurMaterial; } } [Range(0, 4) ] public int iterations = 3 ; [Range(0.2f, 3.0f) ] public float blurSpread = 0.6f ; [Range(1, 8) ] public int downSample = 2 ; void OnRenderImage (RenderTexture src, RenderTexture dest ) if (material != null ) { int rtW = src.width/downSample; int rtH = src.height/downSample; RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0 ); buffer0.filterMode = FilterMode.Bilinear; Graphics.Blit(src, buffer0); for (int i = 0 ; i < iterations; i++) { material.SetFloat("_BlurSize" , 1.0f + i * blurSpread); RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0 ); Graphics.Blit(buffer0, buffer1, material, 0 ); RenderTexture.ReleaseTemporary(buffer0); buffer0 = buffer1; buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0 ); Graphics.Blit(buffer0, buffer1, material, 1 ); RenderTexture.ReleaseTemporary(buffer0); buffer0 = buffer1; } Graphics.Blit(buffer0, dest); RenderTexture.ReleaseTemporary(buffer0); } else { Graphics.Blit(src, dest); } } }

Shader 代码如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 Shader "Unity Shaders Book/Chapter 12 /Gaussian Blur" { Properties { _MainTex ("Base (RGB)", 2 D) = "white" {} _BlurSize ("Blur Size", Float) = 1.0 } SubShader { CGINCLUDE #include "UnityCG.cginc" sampler2D _MainTex; half4 _MainTex_TexelSize; float _BlurSize; struct v2f { float4 pos : SV_POSITION; half2 uv[5 ]: TEXCOORD0; }; v2f vertBlurVertical(appdata_img v) { v2f o; o.pos = UnityObjectToClipPos(v.vertex); half2 uv = v.texcoord; o.uv[0 ] = uv; o.uv[1 ] = uv + float2(0.0 , _MainTex_TexelSize.y * 1.0 ) * _BlurSize; o.uv[2 ] = uv - float2(0.0 , _MainTex_TexelSize.y * 1.0 ) * _BlurSize; o.uv[3 ] = uv + float2(0.0 , _MainTex_TexelSize.y * 2.0 ) * _BlurSize; o.uv[4 ] = uv - float2(0.0 , _MainTex_TexelSize.y * 2.0 ) * _BlurSize; return o; } v2f vertBlurHorizontal(appdata_img v) { v2f o; o.pos = UnityObjectToClipPos(v.vertex); half2 uv = v.texcoord; o.uv[0 ] = uv; o.uv[1 ] = uv + float2(_MainTex_TexelSize.x * 1.0 , 0.0 ) * _BlurSize; o.uv[2 ] = uv - float2(_MainTex_TexelSize.x * 1.0 , 0.0 ) * _BlurSize; o.uv[3 ] = uv + float2(_MainTex_TexelSize.x * 2.0 , 0.0 ) * _BlurSize; o.uv[4 ] = uv - float2(_MainTex_TexelSize.x * 2.0 , 0.0 ) * _BlurSize; return o; } fixed4 fragBlur(v2f i) : SV_Target { float weight[3 ] = {0.4026 , 0.2442 , 0.0545 }; fixed3 sum = tex2D(_MainTex, i.uv[0 ]).rgb * weight[0 ]; for (int it = 1 ; it < 3 ; it++) { sum += tex2D(_MainTex, i.uv[it*2 -1 ]).rgb * weight[it]; sum += tex2D(_MainTex, i.uv[it*2 ]).rgb * weight[it]; } return fixed4(sum, 1.0 ); } ENDCG ZTest Always Cull Off ZWrite Off Pass { NAME "GAUSSIAN_BLUR_VERTICAL" CGPROGRAM #pragma vertex vertBlurVertical #pragma fragment fragBlur ENDCG } Pass { NAME "GAUSSIAN_BLUR_HORIZONTAL" CGPROGRAM #pragma vertex vertBlurHorizontal #pragma fragment fragBlur ENDCG } } FallBack "Diffuse" }

渲染效果如下:

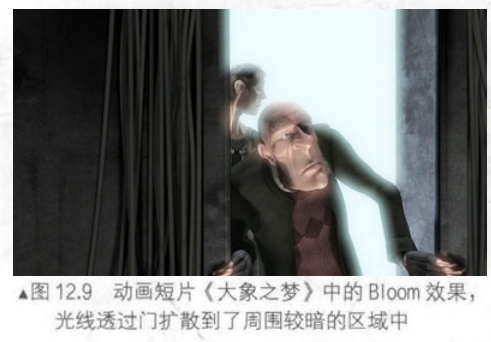

5 Bloom 效果 Bloom 特效是游戏中常见的一种屏幕效果。这种特效可以模拟真实摄像机的一种图像效果,它让画面中较亮的区域“扩散”到周围的区域中,造成一种朦胧的效果。如下图:

Bloom 的实现原理非常简单:我们首先根据一个阈值提取出图像中的较亮区域,把它们存储在一张渲染纹理中,再利用高斯模糊对这张渲染纹理进行模糊处理,模拟光线扩散的效果,最后再将其和原图像进行混合,得到最终的效果。

首先是 cs 脚本:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 using UnityEngine;using System.Collections;public class Bloom : PostEffectsBase { public Shader bloomShader; private Material bloomMaterial = null ; public Material material { get { bloomMaterial = CheckShaderAndCreateMaterial(bloomShader, bloomMaterial); return bloomMaterial; } } [Range(0, 4) ] public int iterations = 3 ; [Range(0.2f, 3.0f) ] public float blurSpread = 0.6f ; [Range(1, 8) ] public int downSample = 2 ; [Range(0.0f, 4.0f) ] public float luminanceThreshold = 0.6f ; void OnRenderImage (RenderTexture src, RenderTexture dest ) if (material != null ) { material.SetFloat("_LuminanceThreshold" , luminanceThreshold); int rtW = src.width/downSample; int rtH = src.height/downSample; RenderTexture buffer0 = RenderTexture.GetTemporary(rtW, rtH, 0 ); buffer0.filterMode = FilterMode.Bilinear; Graphics.Blit(src, buffer0, material, 0 ); for (int i = 0 ; i < iterations; i++) { material.SetFloat("_BlurSize" , 1.0f + i * blurSpread); RenderTexture buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0 ); Graphics.Blit(buffer0, buffer1, material, 1 ); RenderTexture.ReleaseTemporary(buffer0); buffer0 = buffer1; buffer1 = RenderTexture.GetTemporary(rtW, rtH, 0 ); Graphics.Blit(buffer0, buffer1, material, 2 ); RenderTexture.ReleaseTemporary(buffer0); buffer0 = buffer1; } material.SetTexture ("_Bloom" , buffer0); Graphics.Blit (src, dest, material, 3 ); RenderTexture.ReleaseTemporary(buffer0); } else { Graphics.Blit(src, dest); } } }

然后是 Shader 代码:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 Shader "Unity Shaders Book/Chapter 12 /Bloom" { Properties { _MainTex ("Base (RGB)", 2 D) = "white" {} _Bloom ("Bloom (RGB)", 2 D) = "black" {} _LuminanceThreshold ("Luminance Threshold", Float) = 0.5 _BlurSize ("Blur Size", Float) = 1.0 } SubShader { CGINCLUDE #include "UnityCG.cginc" sampler2D _MainTex; half4 _MainTex_TexelSize; sampler2D _Bloom; float _LuminanceThreshold; float _BlurSize; struct v2f { float4 pos : SV_POSITION; half2 uv : TEXCOORD0; }; v2f vertExtractBright(appdata_img v) { v2f o; o.pos = UnityObjectToClipPos(v.vertex); o.uv = v.texcoord; return o; } fixed luminance(fixed4 color) { return 0.2125 * color.r + 0.7154 * color.g + 0.0721 * color.b; } fixed4 fragExtractBright(v2f i) : SV_Target { fixed4 c = tex2D(_MainTex, i.uv); fixed val = clamp (luminance(c) - _LuminanceThreshold, 0.0 , 1.0 ); return c * val; } struct v2fBloom { float4 pos : SV_POSITION; half4 uv : TEXCOORD0; }; v2fBloom vertBloom(appdata_img v) { v2fBloom o; o.pos = UnityObjectToClipPos (v.vertex); o.uv.xy = v.texcoord; o.uv.zw = v.texcoord; #if UNITY_UV_STARTS_AT_TOP if (_MainTex_TexelSize.y < 0.0 ) o.uv.w = 1.0 - o.uv.w; #endif return o; } fixed4 fragBloom(v2fBloom i) : SV_Target { return tex2D(_MainTex, i.uv.xy) + tex2D(_Bloom, i.uv.zw); } ENDCG ZTest Always Cull Off ZWrite Off Pass { CGPROGRAM #pragma vertex vertExtractBright #pragma fragment fragExtractBright ENDCG } UsePass "Unity Shaders Book/Chapter 12 /Gaussian Blur/GAUSSIAN_BLUR_VERTICAL" UsePass "Unity Shaders Book/Chapter 12 /Gaussian Blur/GAUSSIAN_BLUR_HORIZONTAL" Pass { CGPROGRAM #pragma vertex vertBloom #pragma fragment fragBloom ENDCG } } FallBack Off }

原图如下:

Bloom 效果如下:

6 运动模糊 运动模糊是真实世界中的摄像机的一种效果。如果在摄像机曝光时,拍摄场景发生了变化,就会产生模糊的画面。运动模糊在我们的日常生活中是非常常见的,运动模糊效果可以让物体运动看起来更加真实平滑,但在计算机产生的图像中,由于不存在曝光这一物理现象,渲染出来的图像往往都棱角分明,缺少运动模糊。而在现在的许多第一人称或者赛车游戏中,运动模糊是一种必不可少的效果。

运动模糊实现的一种方法是使用累积缓存 记录多张连续图像,然后取平均作为运动模糊图像,但是这种方法对于性能的消耗很大,因为想要获取多帧连续图像意味着要渲染一个场景多次。另一种广泛应用的方法是创建和使用速度缓存 ,这个缓存中存储了各个像素当前的运动速度,然后利用该值来决定模糊的方向和大小。

这一节我们使用类似于第一种方法来实现运动模糊,但不需要在一帧中把场景渲染多次,只要保存之前的渲染结果,不断把当前的渲染图像叠加到之前的渲染图上,从而产生一种运动轨迹的视觉效果。这种方法与原始的利用累积缓存的方法相比性能更好,但模糊效果可能会略有影响。

为此,我们先编写一个脚本让摄像机运动:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 using UnityEngine;using System.Collections;public class Translating : MonoBehaviour { public float speed = 10.0f ; public Vector3 startPoint = Vector3.zero; public Vector3 endPoint = Vector3.zero; public Vector3 lookAt = Vector3.zero; public bool pingpong = true ; private Vector3 curEndPoint = Vector3.zero; void Start () transform.position = startPoint; curEndPoint = endPoint; } void Update () transform.position = Vector3.Slerp(transform.position, curEndPoint, Time.deltaTime * speed); transform.LookAt(lookAt); if (pingpong) { if (Vector3.Distance(transform.position, curEndPoint) < 0.001f ) { curEndPoint = Vector3.Distance(curEndPoint, endPoint) < Vector3.Distance(curEndPoint, startPoint) ? startPoint : endPoint; } } } }

然后编写运动模糊的脚本:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 using UnityEngine;using System.Collections;public class MotionBlur : PostEffectsBase { public Shader motionBlurShader; private Material motionBlurMaterial = null ; public Material material { get { motionBlurMaterial = CheckShaderAndCreateMaterial(motionBlurShader, motionBlurMaterial); return motionBlurMaterial; } } [Range(0.0f, 0.9f) ] public float blurAmount = 0.5f ; private RenderTexture accumulationTexture; void OnDisable () DestroyImmediate(accumulationTexture); } void OnRenderImage (RenderTexture src, RenderTexture dest ) if (material != null ) { if (accumulationTexture == null || accumulationTexture.width != src.width || accumulationTexture.height != src.height) { DestroyImmediate(accumulationTexture); accumulationTexture = new RenderTexture(src.width, src.height, 0 ); accumulationTexture.hideFlags = HideFlags.HideAndDontSave; Graphics.Blit(src, accumulationTexture); } accumulationTexture.MarkRestoreExpected(); material.SetFloat("_BlurAmount" , 1.0f - blurAmount); Graphics.Blit (src, accumulationTexture, material); Graphics.Blit (accumulationTexture, dest); } else { Graphics.Blit(src, dest); } } }

Shader 代码:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 Shader "Unity Shaders Book/Chapter 12 /Motion Blur" { Properties { _MainTex ("Base (RGB)", 2 D) = "white" {} _BlurAmount ("Blur Amount", Float) = 1.0 } SubShader { CGINCLUDE #include "UnityCG.cginc" sampler2D _MainTex; fixed _BlurAmount; struct v2f { float4 pos : SV_POSITION; half2 uv : TEXCOORD0; }; v2f vert(appdata_img v) { v2f o; o.pos = UnityObjectToClipPos(v.vertex); o.uv = v.texcoord; return o; } fixed4 fragRGB (v2f i) : SV_Target { return fixed4(tex2D(_MainTex, i.uv).rgb, _BlurAmount); } half4 fragA (v2f i) : SV_Target { return tex2D(_MainTex, i.uv); } ENDCG ZTest Always Cull Off ZWrite Off Pass { Blend SrcAlpha OneMinusSrcAlpha ColorMask RGB CGPROGRAM #pragma vertex vert #pragma fragment fragRGB ENDCG } Pass { Blend One Zero ColorMask A CGPROGRAM #pragma vertex vert #pragma fragment fragA ENDCG } } FallBack Off }

Blur Amount 设置为 0 时的效果:

Blur Amount 设置为 1 时的效果:

明显看到了运动模糊。当然这只是一种简单的实现,当物体运动速度过快时,这种方法可能会造成单独的帧图像变得可见。之后我们会学习如何利用深度纹理重建速度来模拟运动模糊效果。